USA.gov Uses Human-Centered Design to Roll Out AI Chatbot

Overview

To improve customer service and give better answers to users of the USA.gov website, the Technology Transformation Services team at the General Services Administration (GSA) created a chatbot using artificial intelligence (AI) and automation.

Details

Originally published June 7, 2019

Scammers target millions of Americans every year, and victims report losing more than $1 billion to various types of scams. People regularly visit U.S. government websites and social media feeds for help with distinguishing scams from legitimate transactions, reporting scams, and determining whether lost money can be recovered.

To improve customer service and give better answers to users of the USA.gov website, the Technology Transformation Services team at the General Services Administration (GSA) created a chatbot using artificial intelligence (AI) and automation. As an addition to the USA.gov call center and the staff who answer questions from the public, this beta project holds great potential for helping the public get the help they need with scams.

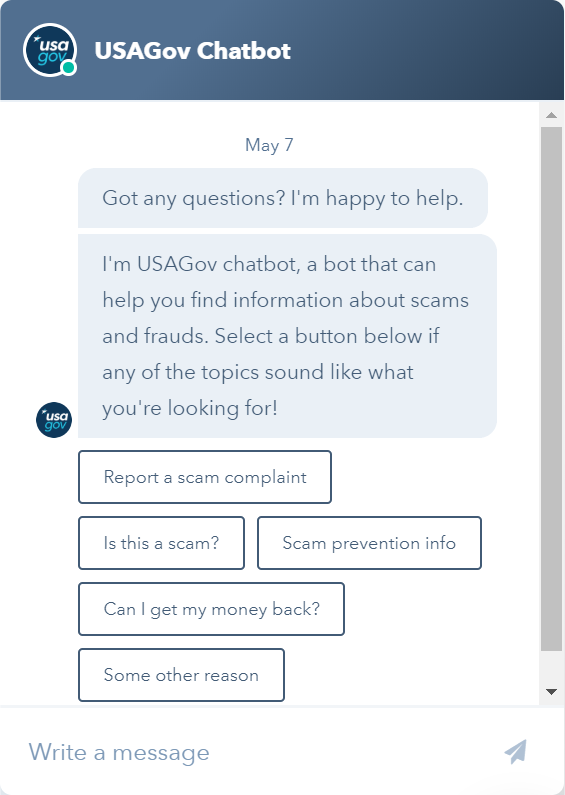

USA.gov’s chatbot initiates an interaction with a user.

How AI can help with the mission

A team led by Jessica Milcetich in the Technology Transformation Services at GSA was charged with improving website content on scams for users. They did not start the scams work thinking AI would be the solution, but after talking to their users and hearing some of their challenges, they realized that AI might help. A bot that could walk a user through resolving a problem seemed well suited to helping users navigate information about scams. The team wanted to explore whether automation would make it easier and more efficient to get users the answers to their questions.

One of the greatest advantages of AI is that it facilitates decision-making by making the process faster and smarter. At the same time, machines, including chatbots, can continuously perform the same task without getting bored or tired and produce more consistent outcomes than humans.

Users come first

The team started with research, aiming to understand the people who might be using their planned chatbot. They recruited 32 people who had previously contacted USA.gov staff about scams either on Facebook or through the call center. Participants were asked to share their experiences with scams, their emotions, and what made them decide to file a report.

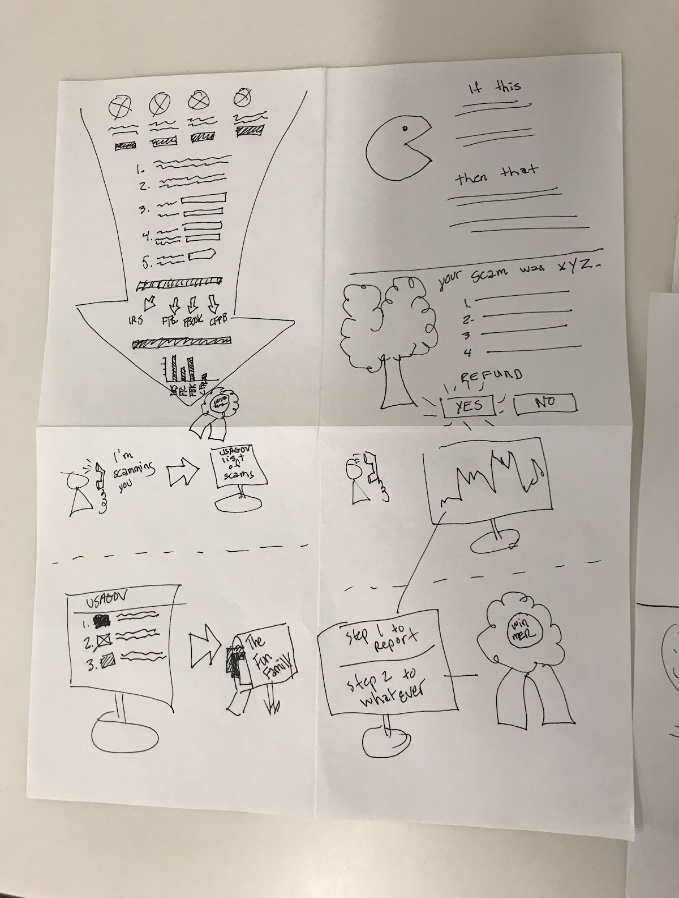

The team generated ideas, then grouped them into categories to help organize possible next steps.

The team held a workshop to identify how participants’ feedback could be grouped by themes, turned into actionable items, and prioritized. The user-centered approach helped add specificity to the team’s initial problem statement. They confirmed that users struggled with reporting scams generally. However, contrary to the initial hypothesis, users could already navigate guidance easily once they knew that the Federal Trade Commission (FTC) was the right authority to respond to scam complaints. Rather than help with complaint paperwork, users wanted help identifying the FTC as the proper responding agency in the first place.

In addition to difficulties identifying where to report scams, the interviewees wondered how to identify a scam and whether they could recover money they had lost in a scam.

The team held a second workshop to generate and rank potential solutions. They concluded that a personalized experience might provide better outcomes for users, which led to the idea that later became a friendly chatbot living on three of the USA.gov webpages about scams (with plans to expand later).

Maximize the technology you have

In the beginning, the team found a tool recommended by other government agencies and the private sector. However, the company behind that tool decided not to go through the GSA approval process. Looking for easier-to-deploy options, the team learned that their marketing platform, HubSpot, offered chatbot-building capability. With an already existing ATO¹ and a user-friendly tool that followed a simple if-then logic system, the team was able to create a test bot, which they deployed to the website in February 2019.

A month after launch, the chatbot had interacted with over 4,000 users. Seventy-eight percent of them successfully completed a task; that is, they asked a question and received a satisfactory answer.

On the back end, the team can see aggregated data about user interaction, including where they drop off in the logic path, which provides valuable information for further refining the bot.
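As a rough illustration of this kind of aggregate view, the sketch below counts where sessions end in a logic path and computes a completion rate. The session data, step names, and both helper functions are hypothetical; this is not HubSpot's reporting interface or the team's actual data.

```python
from collections import Counter

# Hypothetical session logs: each list holds the ordered steps one user reached.
# Step names are invented for illustration; "answer" marks a completed task.
sessions = [
    ["greeting", "topic_menu", "report_scam", "answer"],
    ["greeting", "topic_menu"],
    ["greeting", "topic_menu", "recover_money", "answer"],
    ["greeting"],
    ["greeting", "topic_menu", "identify_scam"],
]


def drop_off_counts(sessions, final_step="answer"):
    """Count the last step reached by each session that did not finish."""
    return Counter(s[-1] for s in sessions if s and s[-1] != final_step)


def completion_rate(sessions, final_step="answer"):
    """Return the fraction of sessions that reached the final step."""
    if not sessions:
        return 0.0
    done = sum(1 for s in sessions if s and s[-1] == final_step)
    return done / len(sessions)


drops = drop_off_counts(sessions)
rate = completion_rate(sessions)
print(drops.most_common())  # steps where unfinished sessions ended
print(f"{rate:.0%}")        # share of sessions that reached an answer
```

A step with a high drop-off count is a candidate for rewording or for adding a preset option that users expected but did not find.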

The case for decision trees

The chatbot uses a question-and-answer approach built on preset options. The team started with this approach because the setup was more straightforward than one that processes user-entered free text. Furthermore, starting with preset options was a good way to test whether questions generated from user research would match what live users actually asked. Down the road, findings from this phase will help inform how to map free text to general topics.

By intentionally limiting the scope of the bot to preset topics within the scam remediation themes, the team had better control over users’ experience interacting with the bot.
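A preset-option bot of this kind can be sketched as a small decision tree. The example below is a minimal illustration only: the node names, prompts, and the `walk` helper are assumptions made for this sketch, not the USA.gov bot's actual script or HubSpot's configuration format.

```python
# Hypothetical decision tree: each node has a prompt and preset options that
# map to the next node. Nodes with no options are final answers (leaves).
# All wording below is illustrative, not the real bot's content.
TREE = {
    "start": {
        "prompt": "What do you need help with?",
        "options": {
            "Report a scam": "report",
            "Identify a scam": "identify",
            "Recover lost money": "recover",
        },
    },
    "report": {
        "prompt": "The Federal Trade Commission (FTC) handles scam complaints.",
        "options": {},
    },
    "identify": {
        "prompt": "Here is guidance on how to identify a scam.",
        "options": {},
    },
    "recover": {
        "prompt": "Here is guidance on whether lost money can be recovered.",
        "options": {},
    },
}


def walk(tree, choices, start="start"):
    """Follow preset choices through the tree and return the prompts shown."""
    node = tree[start]
    shown = [node["prompt"]]
    for choice in choices:
        options = node["options"]
        if choice not in options:
            # Preset options only: anything else is rejected, not interpreted.
            raise ValueError(f"Unknown option: {choice!r}")
        node = tree[options[choice]]
        shown.append(node["prompt"])
    return shown


for line in walk(TREE, ["Report a scam"]):
    print(line)
```

Because every path is enumerated in advance, the designers know exactly what a user can see at each step. The cost is that free-text input is rejected rather than interpreted.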

The team mapped out different user scenarios.

While the chatbot is still in beta, the USA.gov team has big plans for the future. The bot will tackle a wider range of topics, accept free text entries and respond in kind, analyze user satisfaction, and integrate with the existing contact center.

The team took a similar approach to developing the Spanish-language version of the bot. Going forward, they hope to identify exactly which questions resonate with the Spanish-speaking audience. Based on their experience with other topics on the site, they expect some similarities, but also key differences that will require developing new logic flows.

Data and AI in your agency

Modern technology is often lauded for its ability to handle large volumes of unstructured data. Designing and rolling out the USAGov chatbot, however, shows that AI can support great customer experience and uncover useful insights without diving into the world of Big Data. Through careful planning and interactions with real users, the USA.gov team realized that relatively limited interactions with preset logic trees would provide exactly the sort of data needed to support their users and lead to further improvements down the line.

Postscript

Jessica Milcetich is the product owner of USA.gov and USA.gov en Español. To learn more about this project, see “Breaking into Artificial Intelligence: Meet Our USAGov ChatBot!” or contact Jessica at jessica.milcetich@gsa.gov.

Footnotes

The Federal Data Strategy Incubator Project

The Incubator Project helps federal data practitioners think through how to improve government services, enabling the public to get the most out of federal data. This Proof Point and others will highlight the many successes and challenges data innovators face every day, revealing valuable lessons learned to share with data practitioners throughout government.

1. ATO, or Authority to Operate, is the necessary security stamp of approval that software must earn before agency users may work with it.