U.S. Data Federation

Overview

The United States Data Federation is dedicated to making it easier to collect, combine, and exchange data across government through reusable tools and repeatable processes.

Details

Project No Longer Active

This page serves as an archive of the work performed in the past.

Getting good, reliable data is hard. Getting data from different sources makes this even harder.

Several factors add to the difficulty: aligning incentives across organizational boundaries, specifying data standards, ensuring integrity in the data collection process, implementing reliable data validation processes, and more. These are the challenges federated data efforts face.

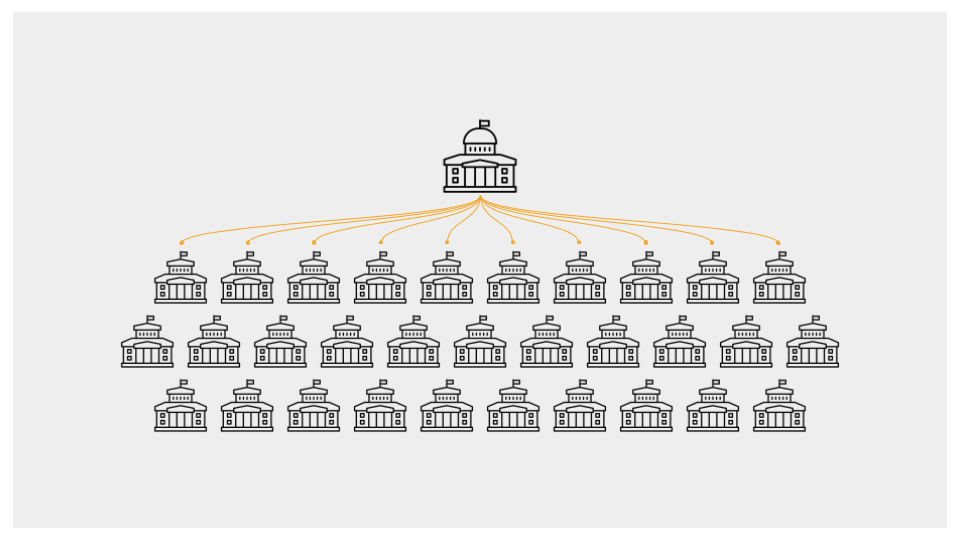

A federated data effort is any project or process in which a common type of data is collected or exchanged across disparate organizational boundaries.

In our distributed style of government, this happens all the time, and it is critical to the success of many programs and services. Federated data efforts happen at every level, whether it’s federal agencies exchanging data, federal agencies collecting data from states, or states aggregating data from local entities like city governments, school districts, or transportation authorities.

These data are used in many ways: they may inform policy, support operational efficiencies, or be published in aggregate form for other data users. Whatever the use, they share the same problem: it’s a challenge to reduce the burden on data providers while still maintaining quality, accuracy, and completeness.

Federated data efforts don’t have adequate infrastructural support.

We believe this type of data sharing effort has not been given the systemic investigation and infrastructural support it deserves. Despite the fact that efforts like these are becoming more frequent, each new effort is still improvising solutions in terms of processes, tooling, and compliance infrastructure, with little sharing of lessons from one effort to the next. That’s where the U.S. Data Federation comes in.

Our goal is to make it easier to manage federated data efforts by developing reusable tools and sharing repeatable processes.

The best practices and resources are intended to include guides and repeatable processes around data governance, organizational coordination, and standards development in federated environments. The reusable tools are intended to include capabilities around data validation, automated aggregation, and the development and documentation of data specifications.

About the U.S. Data Federation Project

The United States Data Federation is a project focused on making it easier to collect, combine, and exchange data from disparate sources.

The problem

Federal agencies often need to collect data from state and local governments, other federal agencies, and other data providers. These data are used to support policy or budget decisions and operational efficiencies, or are published in aggregate form for other data users. Making it easy and convenient for data providers to submit their data while maintaining quality, accuracy, and completeness can often be a challenge.

About the U.S. Data Federation Project

The U.S. Data Federation Project is an initiative of the GSA Technology Transformation Services (TTS) 10x program, which funds technology-focused ideas from federal employees with an aim to improve the experience all people have with our government. The goal of the project is to document repeatable processes, develop reusable tooling, and curate resources to support federated data projects, making it easier for data providers to submit interoperable, high-quality data to federal agencies. We define a federated data project as an effort in which a common type of data is collected or exchanged across complex, disparate organizational boundaries. This type of data management effort is common in our distributed style of government, but currently most such efforts are undertaken in isolation and lack infrastructural support or repeatable best practices.

Our work

In Phase 1, we interviewed leaders of a variety of distributed data management projects and synthesized our findings in the Data Federation Framework. Our findings suggested that reusable tooling and processes would benefit future federated data efforts.

In Phase 2, we partnered with the USDA Food & Nutrition Service and built a functional prototype of a reusable data validation tool that allows users to submit data via a web interface or API to be validated against a set of customizable rules in real time. Read more about that collaboration on the 18F blog.

In Phase 3, we are working to develop a software and service offering to support federated data efforts by

- continuing to engage with data practitioners to discover common needs and repeatable processes;

- refining and expanding the prototype tool developed in Phase 2; and

- validating the tool and other potential reusable tools with additional use cases.

Get in touch!

As we develop reusable tools and resources, we want to know what you would benefit from. A community of practice? A how-to guide on engaging stakeholders? A tool for data aggregation or validation?

If you’re interested, please get in touch.

The U.S. Data Federation Framework

This is the alpha version of this framework.

Summary

This project is a partnership between GSA’s 18F and the Office of Products and Platforms (OPP). We define a data federation as an effort where a certain type of specifiable data is collected across complex, disparate organizational boundaries. This could be, for example, when the federal government collects data from states, or states from municipalities, or even when the Office of Management and Budget collects data systematically from many federal agencies. This type of data sharing effort is common in our distributed style of government, but we believe it has not been given the systemic investigation and infrastructural support it deserves: currently each such effort is treated as an isolated instance, with little sharing of tools or lessons from one effort to the next.

The goal of the U.S. Data Federation project is to fill that gap, providing a common language and framework for understanding these efforts, and (in the future) a toolkit for accelerating the implementation of these efforts.

What we did

We interviewed twelve leaders of federated data projects, and seven additional experts from government, academia, and the private sector who have been influential in shaping or understanding these efforts. We developed a maturity model for these efforts along four axes: Impetus, Community, Specification, and Application. We found that the most successful efforts are those in which all four of these axes are developed iteratively and simultaneously. We also developed a playbook of common ways to ensure these efforts are successful.

Projects we interviewed:

- DATA Act, a 2014 law mandating expanded and standardized reporting of financial data for federal agencies.

- data.gov.ie, the open data portal for Ireland.

- data.gov, the open data portal for the United States.

- code.gov, a searchable directory of open source code made public by the federal government.

- Voting Information Project, which helps voters find information about their elections with collaborative, open-source tools.

- Open Civic Data, an effort to define common schemas and provide tools for gathering information on government organizations, people, legislation, and events.

- opendataphilly.org, the open data portal for Philadelphia.

- OpenReferral, dedicated to developing open standards and platforms for making it easy to share and find information related to community resources.

- Open311, a collaborative model and open standard for civic issue tracking.

- National Information Exchange Model (NIEM), a common vocabulary that enables efficient information exchange across diverse public and private organizations. NIEM can save time and money by providing consistent, reusable data terms and definitions, and repeatable processes.

- Federal Geographic Data Committee, an organized structure of Federal geospatial professionals and constituents that provides executive, managerial, and advisory direction and oversight for geospatial decisions and initiatives across the Federal government.

We also researched the General Transit Feed Specification.

Other experts we spoke with:

- Rachel Bloom, currently at GeoThink, who researched open data standard adoption for her thesis.

- Andrew Nicklin, Director of Data Practices at Johns Hopkins Center for Government Excellence

- James McKinney, Senior Data Standard Specialist at Open Contracting Partnership

- Data Coalition, which advocates on behalf of the private sector and the public interest for the publication of government information as standardized, machine-readable data.

- Open Data Institute, which connects, equips and inspires people around the world to innovate with data.

- Mark Headd, current 18F acquisitions specialist and former Philadelphia CDO.

- Wo Chang, Co-chair of the NIST Big Data Working Group.

- Tyler Kleykamp, Chief Data Officer of Connecticut, who runs the Connecticut Open Data Portal and maintains this list of datasets states must report to the Federal government.

Interviews lasted 30 to 45 minutes. We asked project leaders the following questions (time permitting):

- What is this effort, in your own words?

- What was the impetus or driving force behind this effort?

- In building this effort, what were the biggest challenges, and what went smoothly?

- What tools and technologies were used for this effort?

- Why did you choose this architecture or process?

- What were the political and organizational dynamics of collecting this data?

- Who were the relevant stakeholders for this effort, and how were they convened?

- Is there anyone else we should speak with to better understand this effort?

Interviews with experts were less structured, but we generally asked:

- What experience do you have that might be relevant to a federated data effort?

- What efforts are you aware of that fit into this category?

- Do you have any contacts in those efforts we could reach out to?

- What do you think are the primary challenges of federated data efforts?

We took notes directly in a public GitHub issue, submitted the issue at the end of the interview, and sent it to the participants for review. You can view those raw notes here.

We have also compiled a summary of all projects interviewed.

We analyzed our interview notes to identify common themes and patterns, which we present in two forms: a Maturity Model and a Playbook. The maturity model identifies four principal dimensions by which to gauge a federated effort, and three phases of maturity for each dimension. The playbook consists of a set of actionable plays that have proven helpful to previous efforts.

The Data Federation Maturity Model

Successful execution of a federated data effort is largely a question of incentives and resources. Developing, and complying with, a new process or specification for data submission takes considerable time, effort, and expertise, and will only be possible to sustain with a large number of motivated individuals who have both the ability and the capacity to execute on a long term vision. However, it is difficult, and unwise, to immediately allocate vast resources to a new federated data effort — the effort may be easier than anticipated, or harder, or impossible, for a variety of reasons. We have observed that the most successful of these efforts simultaneously and iteratively develop the maturity of the effort along four axes: Impetus, Community, Specification, and Application. If done properly, these four dimensions work in concert to create a virtuous cycle of more participation, enthusiasm, and resources allocated to the project over time.

| Dimensions | Beginnings | Growth | Mature |
|---|---|---|---|
| Impetus | Grassroots | Policy | Law |
| Community | First Adopters | Trending | Maintenance |
| Specification | Dictionary | Machine Readable | Standardized |
| Application | Alpha | Beta | Production |
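To make the table concrete, the sketch below encodes it as data and flags the dimensions that trail the most mature one. The flagging rule is a hypothetical heuristic based on the observation that successful efforts grow all four axes together; it is not a scoring method defined by the framework.

```python
from typing import Dict, List

# Phases for each dimension, ordered Beginnings -> Growth -> Mature,
# copied from the maturity model table.
PHASES = {
    "Impetus": ["Grassroots", "Policy", "Law"],
    "Community": ["First Adopters", "Trending", "Maintenance"],
    "Specification": ["Dictionary", "Machine Readable", "Standardized"],
    "Application": ["Alpha", "Beta", "Production"],
}


def phase_index(dimension: str, phase: str) -> int:
    """Return 0 (Beginnings), 1 (Growth), or 2 (Mature) for a named phase."""
    return PHASES[dimension].index(phase)


def lagging_dimensions(assessment: Dict[str, str]) -> List[str]:
    """Return the dimensions that sit below the most mature dimension.

    Hypothetical heuristic: because the framework recommends developing all
    four axes together, any axis behind the leader is flagged for attention.
    """
    missing = set(PHASES) - set(assessment)
    if missing:
        raise ValueError("assessment is missing: %s" % ", ".join(sorted(missing)))
    levels = {dim: phase_index(dim, phase) for dim, phase in assessment.items()}
    top = max(levels.values())
    return [dim for dim, level in levels.items() if level < top]


# Example: a law exists, but community, specification, and application trail it.
effort = {
    "Impetus": "Law",
    "Community": "First Adopters",
    "Specification": "Machine Readable",
    "Application": "Alpha",
}
print(lagging_dimensions(effort))  # ['Community', 'Specification', 'Application']
```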

Impetus

The business or legal need that sets the project in motion

The first spark behind any federated data effort is some sort of impetus. This might be a shared understanding of a problem, where a grassroots community comes together and decides to act (for example, the law enforcement community creating NIEM). Or it could be a policy, where a central authority (e.g., OMB, or a state government) decides to require compliance of a certain sort through an official policy (for example, the OMB M-13-13 policy with Data.gov). Or it could be as formal as a law, such as in the case of the DATA Act. The more formal the mechanism, the greater force and permanence it has, and thus the harder it is to adapt during implementation.

For both policies and laws, it is critical to leave all technical decision making to the implementation team. In writing a policy or law, focus on processes and outcomes, not on implementation details, and never attempt to fully dictate a technical or data standard. For example, it can be very helpful to say that the standard must be machine readable, extensible, and developed alongside a web application with user feedback; it would not be helpful to say that the standard must be written in XML, that states can modify it as needed, and that it must be showcased in a user-friendly web application. Specifying details such as XML can have complex and unforeseen consequences. In extreme cases you can specify the data elements to provide, but it is best to avoid doing so. It’s also important to recognize that data standards need maintenance and adaptation over time: it’s wise to specify an annual or biannual review of the standard itself to make sure the data is still providing value.

Community

The people who provide and consume the data

For a federated data effort, you have two communities you need to keep happy: the data owners, or those who need to do the work to adopt the standard, and the community of users, or consumers of the aggregated data. It is the job of the project team (a small team dedicated to driving adoption) to keep both communities excited and encouraged.

When executing on a federated data effort, it’s important not to expect or plan for immediate compliance from the entire community of data owners. Instead, select 2-3 early adopters: good-faith partners who are excited about helping. One partner is not enough to generalize from, and more than three would begin to overburden the project team. Once those early adopters have been identified, help them implement a draft of the standard in a high-touch fashion. This means working side by side with them to implement it, or even having the project team implement it themselves in order to demonstrate feasibility and show them how it works. The project team’s relationship with the early adopters is critical and bidirectional: the implementation details and the standard itself need to be adapted to be user friendly for data owners, and those owners must also learn why the standard is helpful to a broader audience.

Typically, the data being collected is already in use by the communities most relevant to the data owners, and it requires a shift in mindset to invest in making it more broadly available. For example, for the Voting Information Project, counties typically already publish their polling locations and ballot information on their websites. Without a standard reporting format, however, that information was nearly impossible for citizens to find. Thus, the project team was responsible for conveying that problem of scale to the owners and helping them understand the standard. Similarly, for the DATA Act, the project team quickly realized that agencies were most comfortable working with CSV (comma-separated values) format. Rather than try to teach them all to adhere to the XML-style format the machine readable specification was written in, they developed a parallel standard for the CSV format.
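One way to picture a parallel CSV standard is a single field list that drives both formats, so that a submission in either format becomes the same record and validation only has to be written once. A minimal sketch, assuming a hypothetical three-field record (not the actual DATA Act schema):

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical three-field record; the real DATA Act schema is much larger.
FIELDS = ["award_id", "agency_code", "obligation_amount"]


def record_from_xml(xml_text):
    """Read one <record> element whose child elements are named after FIELDS."""
    root = ET.fromstring(xml_text)
    return {name: root.findtext(name) for name in FIELDS}


def records_from_csv(csv_text):
    """Read CSV rows whose header lists exactly the names in FIELDS."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != FIELDS:
        raise ValueError("unexpected header: %r" % (reader.fieldnames,))
    return [dict(row) for row in reader]


xml_text = (
    "<record><award_id>A-1</award_id><agency_code>047</agency_code>"
    "<obligation_amount>1000.00</obligation_amount></record>"
)
csv_text = "award_id,agency_code,obligation_amount\nA-1,047,1000.00\n"

# Both submissions produce the same record, so downstream checks are shared.
assert records_from_csv(csv_text) == [record_from_xml(xml_text)]
```

For untrusted uploads, a hardened XML parser such as defusedxml is worth considering in place of the standard library parser.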

Once the early adopters have had success, it’s time to roll out the larger standard. Since the first-mover risk has been absorbed, the effort will typically start trending in the larger community, which now has use cases to point to, people to talk with, and code to look at to help it comply. Once adoption is complete or plateaus, the standard enters the maintenance phase, where ownership problems become salient. Often the trending phase is accompanied by influxes of excitement, talent, press, and funding. After a few years all of those may lessen, and it becomes important to establish long-term mechanisms for maintaining the standard itself and the processes around compliance. If the standard has become a normal part of operations, maintenance can be part of the normal budget. If it continues to be “tacked on” to normal operations, the effort is at risk of fizzling out.

It is also important to be in touch with the community of data consumers from the very beginning. This could include journalists, scientists, citizens, decision makers within the organization, and others. The reason for this is twofold: first, they are the ultimate users of the data, so their feedback should be integrated early and often. Second, their positive feedback and involvement will help incentivize the data owners, who are embarking on a long and uncertain journey.

Specification

The definition of what data needs to be provided and what format it should be in

The specification itself is responsible for communicating to data owners what data needs to be provided and how it should be formatted. At the lowest level of formality, project teams can start with a description of fields (a data dictionary). These descriptions should be detailed, should not use acronyms, should include exact field (column) names, and should include examples of compliant data. If you have a simple data standard, for example a single CSV or a set of CSVs, this level of detail may be sufficient. For example, the General Transit Feed Specification, which is among the most successful federated data efforts, does not publish a machine readable standard, but rather has thorough documentation detailing the requirements and fields in language the domain experts in transit agencies would understand.
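Even at the dictionary level, keeping field descriptions in a structured form lets one source produce both the human-readable documentation and a basic check that submitted column names match exactly. A minimal sketch, using hypothetical election fields (including the start_date field discussed in this section):

```python
import csv
import io

# Hypothetical data dictionary: exact column names, plain-language
# descriptions without acronyms, and an example of compliant data for each.
DATA_DICTIONARY = [
    {"name": "election_name", "description": "Official name of the election",
     "example": "General Election"},
    {"name": "start_date", "description": "Starting date of the election",
     "example": "2018-01-15"},
    {"name": "polling_location_address",
     "description": "Street address of the polling location",
     "example": "123 Main Street"},
]


def render_markdown(dictionary):
    """Render the data dictionary as a documentation table."""
    lines = ["| Field | Description | Example |", "|---|---|---|"]
    for field in dictionary:
        lines.append("| %s | %s | %s |" % (field["name"], field["description"], field["example"]))
    return "\n".join(lines)


def header_problems(csv_text, dictionary=DATA_DICTIONARY):
    """Compare a CSV header row against the dictionary's exact column names."""
    header = next(csv.reader(io.StringIO(csv_text)), [])
    expected = [field["name"] for field in dictionary]
    return {
        "missing": [name for name in expected if name not in header],
        "unknown": [name for name in header if name not in expected],
    }


print(render_markdown(DATA_DICTIONARY))
print(header_problems("election_name,StartDate\n"))
# {'missing': ['start_date', 'polling_location_address'], 'unknown': ['StartDate']}
```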

A more formal specification should be machine readable: in this case, not only is the data dictionary well documented as detailed above, but the specification itself can be used to automatically perform simple validations against the data. This level of specificity can help reduce compliance burden by increasing clarity. For example, a data dictionary might have a field called “start_date” and describe that it’s the starting date of an election, but a machine readable version would be forced to clarify that the format of the date should look like 2018-01-15, which reduces the potential for wasteful back-and-forth or downstream data integrity problems. For specifications that must map to many-to-one or many-to-many relationships, a machine readable format (e.g., JSON Schema or XML Schema) is strongly preferred, even as a first iteration. That machine readable schema can be versioned as well.
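The sketch below shows how a machine readable specification removes the ambiguity around start_date. It uses a hypothetical, versioned spec whose keywords mirror JSON Schema ("required", "properties", "type", "pattern", "format"), but only this small subset is interpreted, by a hand-written loop rather than a real JSON Schema validator:

```python
import re
from datetime import datetime

# Hypothetical, versioned machine-readable specification. Keywords mirror
# JSON Schema, but only the subset below is interpreted by this sketch.
SPEC = {
    "version": "1.0.0",
    "required": ["election_name", "start_date"],
    "properties": {
        "election_name": {"type": "string"},
        "start_date": {"type": "string", "pattern": r"^\d{4}-\d{2}-\d{2}$", "format": "date"},
    },
}


def validate(record, spec=SPEC):
    """Return a list of error messages; an empty list means the record passes."""
    errors = []
    for name in spec["required"]:
        if name not in record:
            errors.append("%s: missing required field" % name)
    for name, rules in spec["properties"].items():
        if name not in record:
            continue
        value = record[name]
        if rules.get("type") == "string" and not isinstance(value, str):
            errors.append("%s: expected a string" % name)
            continue
        pattern = rules.get("pattern")
        if pattern and not re.search(pattern, value):
            errors.append("%s: %r does not match %s" % (name, value, pattern))
            continue
        # The pattern checks the shape; this checks that the date exists.
        if rules.get("format") == "date":
            try:
                datetime.strptime(value, "%Y-%m-%d")
            except ValueError:
                errors.append("%s: %r is not a valid calendar date" % (name, value))
    return errors


print(validate({"election_name": "General Election", "start_date": "2018-01-15"}))  # []
print(validate({"start_date": "2018-02-30"}))
# ['election_name: missing required field', "start_date: '2018-02-30' is not a valid calendar date"]
```

In practice, a standard JSON Schema validator would replace the hand-written loop, and the version field lets submitters state which revision of the specification they targeted.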

Over many years of stability and adoption, it may make sense to promote the machine readable schema into a full standard, recognized by a formal standards body, for example. This level of maturity is rare, but can be helpful to promote stability. Working with standards bodies is generally a complex endeavor, and thus not recommended until the standard has a proven user base and community.

Application

A software application optimized for demonstrating and providing the value of the data to end users

Only in very rare cases will raw data published online be compelling enough to drive adoption by the user community. Generally, that community will have requirements that go beyond the data itself. For example, they may want to search or visualize it easily, or access it programmatically through an Application Programming Interface (API). Since that user community ultimately drives value for the entire effort, it’s important to develop an application in concert with the standard to demonstrate the value of the data. For example, with code.gov, the project team developed an alpha version of code.gov in just a few months, showcasing the work of some of the early adopters. The alpha quickly earned over 100,000 viewers, which helped ignite excitement for the effort across government and provided countless valuable perspectives on how the data would be used and what, exactly, the value was that the team should be targeting.
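Programmatic access can start very small. The sketch below shows the query logic an application might expose for searching aggregated records, assuming hypothetical records and a hypothetical /search endpoint; connecting it to a web framework is left out so the logic stays easy to test:

```python
import json
from urllib.parse import parse_qs, urlsplit

# Hypothetical aggregated records, as an application might hold after
# combining submissions from several data owners.
RECORDS = [
    {"agency": "Agency A", "name": "validation-tool", "description": "Validates uploaded CSV files"},
    {"agency": "Agency A", "name": "transit-map", "description": "Maps public transit routes"},
    {"agency": "Agency B", "name": "grant-tracker", "description": "Tracks grant applications"},
]


def handle_search(url):
    """Answer a hypothetical GET /search?q=...&agency=... request with JSON."""
    params = parse_qs(urlsplit(url).query)
    text = params.get("q", [""])[0].lower()
    agency = params.get("agency", [None])[0]
    matches = [
        record for record in RECORDS
        if text in (record["name"] + " " + record["description"]).lower()
        and (agency is None or record["agency"] == agency)
    ]
    return json.dumps({"count": len(matches), "results": matches})


print(handle_search("/search?q=transit&agency=Agency+A"))
```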

Often for federated data efforts, the value proposition itself is not fully known but hypothesized: developing a “killer app” that showcases that value directly is a critical part of the effort. The application also helps inform the specification itself: perhaps some fields are found to be unnecessary, incorrectly formatted, or missing. This application provides important fuel for incentivizing adoption. For example, for the General Transit Feed Specification, letting local citizens search through their city’s public transit routes on Google Maps provided clear and tremendous value to potential adopters. As is industry best practice, it’s important to develop this application iteratively: start with a small subset of features, release publicly to early adopters in an alpha phase, perform more extensive testing in a beta phase, and add stability in the production phase.

The Data Federation Playbook

The following nine plays are drawn from our interviews with successful federated data efforts. They are intended to provide highlights of what project teams recall as being essential to the success of their project. It’s unlikely that any effort will be able to execute on all plays, but if you are undertaking a new federated data effort, it will greatly improve your chance of success if you have a few of these plays in motion.

Policy Should Be Focused on Processes and Outcomes, Not Implementation: Project teams must be able to adapt the details of implementing an open data standard on the fly to meet the needs of the owner and user communities. Don’t specify technical details in policy or law; instead, focus on goals and the processes used to achieve them (e.g., requiring that the standard be developed iteratively with user feedback, or that a machine-readable data standard be developed).

Identify Use Cases With Demonstrated Demand: When prioritizing whether or not to invest in a federated data effort, seek out ways to prove demand. Are citizens or media frequently requesting a certain type of data? Is there already a community built around fixing or cleaning up a certain type of data? Talk with your call centers and local communities. For example, with the Voting Information Project, Google reached out to the public sector because they had data indicating that people were searching for basic polling place and ballot data, but not finding it.

Develop a Killer App: If your hypothesis is that your data is useful, do the work to build out the first use case and demonstrate that value. This will help win over the hearts and minds of participants. If you can’t think of a single use case for your data, it might make sense to do more outreach with relevant communities to refine the value proposition before undertaking a larger effort. For example, the General Transit Feed Specification has become widely and enthusiastically adopted since it allows an agency’s transit data to be displayed in Google Maps.

Allocate Proper Resources: Throwing money at data owners without any structure will likely not get great results, but adding financial support for training, obtaining new software, and high-touch onboarding is very helpful. For example, data.gov.ie has an “open data unit” of 3-4 people whose sole purpose is to provide high-touch assistance to departments who want to provide data but need help navigating technical or procedural issues. And when the state of Connecticut wanted to normalize the financial data of its municipalities, it provided grants for obtaining new software and assisted with training, which built up goodwill and was of significant practical importance.

Implementation Should Be Driven by a Single Empowered Team: It’s important to be able to deliver iterations on the application and specification quickly in order to build trust and momentum. Do not attempt to split responsibilities (e.g., the specification maintained by one department, and the application by another).

When Possible, Deliver Value Directly to Data Owners: The teams responsible for complying with the policy bear the vast majority of the burden, yet often receive little benefit. The long-term success of these efforts is often contingent on delivering value directly to these data owners. For example, in the state of Connecticut, municipalities readily submit their data to the open data portal, since it is the easiest way to publish and share data from one department to another, which is an operational necessity. And for the Philadelphia Open Data Portal, newly published data gets publicity in the form of a blog post, and gets visualizations layered on top of it. Government employees are very motivated by public service, and will be delighted to comply if they see the public engaging fruitfully with the data.

Start With Simple Technologies: Just because a project might have tremendous value, or be complex organizationally, doesn’t mean it needs to be complex at the technical level. For the DATA Act, for example, the project team found that CSVs were the easiest format for owners to comply with, and also very easy to ingest and validate. The system behind the DATA Act, which was built on time for one-tenth of the CBO cost estimate, is architecturally just a simple web application.
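As a rough illustration of how little machinery CSV ingestion needs, the sketch below uses only Python's standard library to validate a submission, report problems by line number, and total the valid rows. The column names are hypothetical and do not come from the DATA Act specification:

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal, InvalidOperation

# Hypothetical required columns for a spending submission.
REQUIRED_COLUMNS = ["agency_code", "award_id", "obligation_amount"]


def ingest(csv_text):
    """Validate a CSV submission and total valid obligation amounts by agency.

    Returns (totals, errors). Line numbers assume one physical line per
    record, i.e. no quoted fields containing newlines and no blank lines.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [name for name in REQUIRED_COLUMNS if name not in header]
    if missing:
        return {}, ["header: missing columns %s" % ", ".join(missing)]
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    errors = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        if not row["award_id"]:
            errors.append("line %d: award_id is empty" % line_no)
            continue
        amount_text = row["obligation_amount"] or ""
        try:
            amount = Decimal(amount_text)
        except InvalidOperation:
            amount = None
        if amount is None or not amount.is_finite():
            errors.append("line %d: obligation_amount %r is not a number" % (line_no, amount_text))
            continue
        totals[row["agency_code"]] += amount
    return dict(totals), errors


totals, errors = ingest(
    "agency_code,award_id,obligation_amount\n"
    "047,A-1,100.50\n"
    "047,A-2,abc\n"
    "012,,5\n"
    "012,A-3,20\n"
)
print(totals)  # {'047': Decimal('100.50'), '012': Decimal('20')}
print(errors)
```

Decimal is used instead of float so that currency totals do not pick up binary rounding error.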

Nurture Early Adopters: Identify 2-3 early adopters, and work side by side with them to comply with a draft of the specification. Their success is critical to adoption — they will become much more convincing evangelists of your effort than you can be. They’re also critical from a practical standpoint to allow for proper feedback and generalization, provide a forum to discuss challenges, and demonstrate technical feasibility.

Support Compliance Tooling: In order to lessen the burden on data owners, it’s important to do everything you can to help them compile and validate their data. For example, data.gov provides inventory.data.gov, a metadata inventory tool for agencies that easily exports the metadata to the required format. It also provides an online tool for validating that a data.json file adheres to the specified format. Publicly accessible, human readable documentation is also a critical part of the success of these efforts.
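A minimal sketch of the kind of check a data.json validator performs. The required fields and accessLevel values below are an assumed subset of the Project Open Data metadata schema, chosen for illustration; the published schema is the authority and requires more than this:

```python
import json

# Assumed subset of the Project Open Data (data.json) dataset fields, for
# illustration only; the published metadata schema is the authority.
REQUIRED_DATASET_FIELDS = ["title", "description", "identifier", "accessLevel"]
ACCESS_LEVELS = {"public", "restricted public", "non-public"}


def check_data_json(text):
    """Return human-readable problems found in a data.json catalog string."""
    try:
        catalog = json.loads(text)
    except ValueError as exc:  # json.JSONDecodeError subclasses ValueError
        return ["not valid JSON: %s" % exc]
    if not isinstance(catalog, dict) or not isinstance(catalog.get("dataset"), list):
        return ["top level must be an object with a 'dataset' array"]
    problems = []
    for i, dataset in enumerate(catalog["dataset"]):
        if not isinstance(dataset, dict):
            problems.append("dataset[%d]: must be an object" % i)
            continue
        for field in REQUIRED_DATASET_FIELDS:
            if not dataset.get(field):
                problems.append("dataset[%d]: missing %s" % (i, field))
        level = dataset.get("accessLevel")
        if level and (not isinstance(level, str) or level not in ACCESS_LEVELS):
            problems.append("dataset[%d]: unknown accessLevel %r" % (i, level))
    return problems


example = {"dataset": [{"title": "Bus stops", "accessLevel": "open"}]}
for problem in check_data_json(json.dumps(example)):
    print(problem)
```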

Next steps

Due to its decentralized nature, the U.S. Government often undertakes complex federated data efforts. It’s time to collaborate more on what works, what doesn’t, and ways to lower capital investment for these efforts in the future by sharing tooling and other resources. We hope to begin a new phase of this project shortly, focused on identifying and building common tooling and answering critical questions around the long-term ownership and maintenance of federated data efforts.

Helpful resources

- JSON Schema

- Understanding JSON Schema (ebook)

- XML data types

- Table Schema

- CKAN

- datastandards.directory

- State-Federal Datasets

Tools

The U.S. Data Federation is curating and creating reusable tools to support data validation, automated aggregation, and the development and documentation of data specifications.

ReVAL - Reusable Validation & Aggregation Library

The first tool prototyped through this effort is a reusable Django App for validating and aggregating data via API and web interface.

For the web interface, it can manage data submitted as file uploads to a central gathering point, and it can perform data validation, basic change tracking and duplicate file handling. Each file generally contains multiple data rows, and each user may submit multiple files.

Through the API, users can validate data and view uploads that were made through the web interface.
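The duplicate file handling and basic change tracking described above could work by fingerprinting file contents. The sketch below is a hypothetical illustration, not ReVAL's code: it hashes each upload with SHA-256, marks a file as a duplicate when the same user has already submitted identical bytes, and keeps every submission in a history list:

```python
import hashlib
from datetime import datetime, timezone


class SubmissionLog:
    """Hypothetical upload tracker: flags repeat files and keeps a history."""

    def __init__(self):
        self._first_upload = {}  # (user, sha256 hex digest) -> first matching record
        self.history = []        # every submission, in the order received

    def submit(self, user, filename, content):
        """Record an upload (content is bytes) and report whether it repeats an earlier one."""
        digest = hashlib.sha256(content).hexdigest()
        original = self._first_upload.get((user, digest))
        record = {
            "user": user,
            "filename": filename,
            "sha256": digest,
            "received_at": datetime.now(timezone.utc).isoformat(),
            "duplicate_of": original["filename"] if original else None,
        }
        if original is None:
            self._first_upload[(user, digest)] = record
        self.history.append(record)
        return record


log = SubmissionLog()
log.submit("alice", "january.csv", b"school,meals\nA,120\n")
repeat = log.submit("alice", "january-copy.csv", b"school,meals\nA,120\n")
print(repeat["duplicate_of"])  # january.csv
```

Hashing the bytes rather than comparing filenames catches renamed copies, but it treats a file with one changed row as new, which is usually the desired behavior for resubmissions.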

Learn more about the tool and track its development on GitHub

Features

- Flexible input format

- Validation with:

  - goodtables

  - JSON Logic

  - SQL

  - JSON Schema

  - a custom validation class

- Row-by-row feedback on validation results

- Manage and track status of data submissions

- Re-submit previous submissions

- Flexible ultimate destination for data

- API for validation
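To show what rule-based, row-by-row feedback can look like, the sketch below evaluates a small, simplified subset of JSON Logic (var, and, or, and two-argument comparisons) in plain Python. It is not the json_logic_qubit package ReVAL lists as a dependency, and real JSON Logic differs in details such as loose equality; the field names and rules are hypothetical:

```python
import operator

# Two-argument comparisons supported by this simplified evaluator.
COMPARISONS = {
    "==": operator.eq, "!=": operator.ne,
    "<": operator.lt, "<=": operator.le,
    ">": operator.gt, ">=": operator.ge,
}


def apply_rule(rule, row):
    """Evaluate a JSON Logic-style rule against one row (a dict of values)."""
    if not isinstance(rule, dict):
        return rule  # literals such as 0 or "yes" evaluate to themselves
    if len(rule) != 1:
        raise ValueError("a rule must have exactly one operator: %r" % (rule,))
    op, args = next(iter(rule.items()))
    if not isinstance(args, list):
        args = [args]  # allow {"var": "x"} as shorthand for {"var": ["x"]}
    if op == "var":
        return row.get(args[0])
    if op == "and":
        return all(apply_rule(arg, row) for arg in args)
    if op == "or":
        return any(apply_rule(arg, row) for arg in args)
    if op in COMPARISONS and len(args) == 2:
        return COMPARISONS[op](apply_rule(args[0], row), apply_rule(args[1], row))
    raise ValueError("unsupported operator: %s" % op)


def passes(rule, row):
    """Treat comparisons that cannot be evaluated (e.g. a missing value) as failures."""
    try:
        return bool(apply_rule(rule, row))
    except TypeError:
        return False


def validate_rows(rows, rules):
    """Return feedback for each row: its number, pass/fail, and failed messages."""
    feedback = []
    for number, row in enumerate(rows, start=1):
        errors = [r["message"] for r in rules if not passes(r["logic"], row)]
        feedback.append({"row": number, "valid": not errors, "errors": errors})
    return feedback


# Hypothetical rules for a meal-count submission.
RULES = [
    {"logic": {">=": [{"var": "meals_served"}, 0]},
     "message": "meals_served must not be negative"},
    {"logic": {"<=": [{"var": "free_meals"}, {"var": "meals_served"}]},
     "message": "free_meals cannot exceed meals_served"},
]

rows = [{"meals_served": 100, "free_meals": 40}, {"meals_served": 50, "free_meals": 60}]
for item in validate_rows(rows, RULES):
    print(item)
# {'row': 1, 'valid': True, 'errors': []}
# {'row': 2, 'valid': False, 'errors': ['free_meals cannot exceed meals_served']}
```

Keeping rules as data rather than code is what makes them customizable per program: new rules can be stored and edited without changing the validator.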

Requirements

- Python (3.5, 3.6)

- Django (1.11)

- Goodtables

- pyyaml

- djangorestframework

- psycopg2

- json_logic_qubit

- dj-database-url

- requests

Success Stories

- GSA 18F - Saving time and improving data quality for the National School Lunch & Breakfast Program